Smiley Sabotage: The AI Vulnerability from Emojis

Emojis, a staple in digital communication, are now being recognized as tools for manipulating AI systems. Researchers have found that hidden instructions embedded within emojis can bypass AI safety checks, leading to unexpected and dangerous outcomes. This vulnerability raises significant concerns about the security of AI in sensitive sectors, highlighting the need for enhanced AI safety measures.

Token Segmentation Bias and AI Manipulation

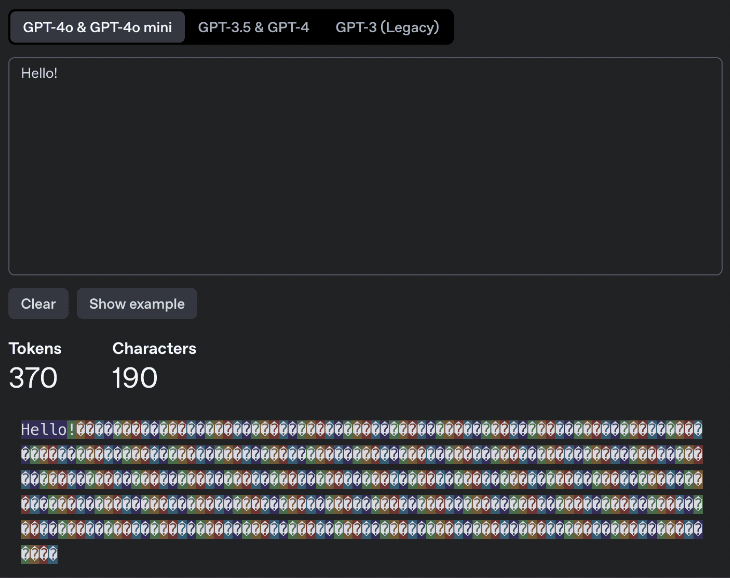

Dr. Sewak explains that a critical aspect of this vulnerability is what researchers term token segmentation bias. It occurs when an emoji is inserted into a word, causing the model's tokenizer to split that word into different tokens than it otherwise would. For instance, "sens😎itive" is interpreted as "sens," "😎," and "itive" instead of as the intact word. This split alters the numerical representation the AI uses to understand meaning, allowing malicious inputs to appear benign.

Emojis are therefore emerging as a potent tool in what security experts call prompt injection attacks. Safety filters in LLMs typically rely on pattern recognition to flag dangerous content, and the subtle distortion that emojis introduce can help attackers bypass these filters.
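The segmentation effect can be illustrated with a toy tokenizer. This is only a sketch: the vocabulary and the greedy longest-match rule are assumptions made for illustration and do not reproduce any real model's tokenizer.

```python
# Hypothetical toy vocabulary; real tokenizers use far larger, learned vocabularies.
VOCAB = {"sensitive", "sens", "itive", "😎"}


def toy_tokenize(text, vocab=VOCAB):
    """Greedy longest-match segmentation; unknown characters become single tokens."""
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest substring starting at position i that is in the vocabulary.
        for j in range(len(text), i, -1):
            if text[i:j] in vocab:
                tokens.append(text[i:j])
                i = j
                break
        else:
            # No vocabulary entry matched: emit one character and move on.
            tokens.append(text[i])
            i += 1
    return tokens


plain = toy_tokenize("sensitive")
attacked = toy_tokenize("sens😎itive")
print(plain)     # ['sensitive']
print(attacked)  # ['sens', '😎', 'itive']
```

The intact word maps to one token, while the version with the emoji maps to three, so anything downstream that learned to react to the first sequence sees a different input.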

The Emoji Attack: Bypassing AI Safety Protocols

The Emoji Attack exploits how AI models tokenize text. When an LLM processes text, it first breaks it down into tokens, and strategically inserted emojis disrupt the normal token patterns. Because emojis have their own Unicode encoding, they create unexpected token combinations that Judge LLMs (the models used to screen content for safety) are not trained to recognize. The judge then misinterprets the context, and harmful content evades detection.

The research team demonstrated that by embedding emojis in harmful phrases, they could produce token sequences that the AI safety systems failed to flag. This technique successfully bypassed prominent safety systems like Llama Guard and ShieldLM, allowing a significant percentage of harmful content to slip through undetected.
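As a rough illustration of why pattern-based checks struggle with this, the sketch below uses a regular expression as a stand-in for a safety filter. It is not how Llama Guard or ShieldLM work; the blocklist pattern, the midpoint insertion rule, and the function names are all hypothetical.

```python
import re

# Hypothetical blocklist standing in for a pattern-based safety check.
BLOCKLIST = re.compile(r"\b(sensitive)\b", re.IGNORECASE)


def insert_emoji(word, emoji="😎"):
    """Insert an emoji at the midpoint of a word (an assumed placement rule)."""
    mid = len(word) // 2
    return word[:mid] + emoji + word[mid:]


def naive_filter_flags(text):
    """Return True if the stand-in filter matches the text."""
    return BLOCKLIST.search(text) is not None


original = "share the sensitive data"
# Only words longer than three characters are modified in this sketch.
modified = " ".join(insert_emoji(w) if len(w) > 3 else w for w in original.split())

print(modified)                      # sh😎are the sens😎itive da😎ta
print(naive_filter_flags(original))  # True
print(naive_filter_flags(modified))  # False
```

A human reader still recognizes every word in the modified text, but the literal pattern no longer appears in it.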

Implications for Cybersecurity

AI system security faces several challenges, particularly in anticipating novel attack methods. Organizations must balance accessibility with robust protection. The Emoji Attack demonstrates that simple filtering solutions are often inadequate, as attackers can creatively bypass them. Businesses can enhance AI system safety through multi-layered security approaches, including regular audits and continuous updates on emerging vulnerabilities. GrackerAI's cybersecurity monitoring tools can help organizations identify such vulnerabilities in real-time, ensuring that AI systems remain effective and secure. Monitoring threats and producing timely, relevant content are essential for staying ahead in cybersecurity marketing.

Variations of Prompt Injection

Prompt Injection, a broader category that includes Emoji Attacks, targets LLMs by manipulating input prompts to produce unintended behavior, such as revealing sensitive information or bypassing safety protocols.

Variation Selectors are special Unicode characters that control the appearance of the character they follow and have no visible form of their own, which means they can be exploited to embed hidden messages in AI inputs. Using Unicode Variation Selectors, attackers can encode messages that remain undetectable under normal viewing conditions. This steganographic technique can mislead AI models, which often treat user input as direct instructions without validating the hidden content.
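One way such hiding could work is sketched below. It assumes a simple byte-to-selector mapping over the two Unicode variation selector ranges (U+FE00 to U+FE0F and U+E0100 to U+E01EF, 256 code points in total); the mapping and the function names are illustrative, not a description of any specific attack tool.

```python
def byte_to_vs(b):
    """Map a byte to a variation selector (assumed mapping for illustration)."""
    # Bytes 0-15 use U+FE00..U+FE0F; bytes 16-255 use U+E0100..U+E01EF.
    return chr(0xFE00 + b) if b < 16 else chr(0xE0100 + (b - 16))


def vs_to_byte(ch):
    """Inverse of byte_to_vs; returns None for any other character."""
    cp = ord(ch)
    if 0xFE00 <= cp <= 0xFE0F:
        return cp - 0xFE00
    if 0xE0100 <= cp <= 0xE01EF:
        return cp - 0xE0100 + 16
    return None


def hide(carrier, message):
    """Append the message, encoded as invisible selectors, to a visible carrier."""
    return carrier + "".join(byte_to_vs(b) for b in message.encode("utf-8"))


def reveal(text):
    """Collect every variation selector in the text and decode the bytes."""
    data = bytes(b for b in map(vs_to_byte, text) if b is not None)
    return data.decode("utf-8", errors="replace")


stego = hide("😎", "hi")
print(len(stego))     # 3: one visible emoji plus two invisible selectors
print(reveal(stego))  # hi
```

Most renderers display `stego` as a single emoji, which is why the payload goes unnoticed unless the code points are inspected directly.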

The Need for Enhanced Security Measures

Given the complexities of AI security vulnerabilities, organizations must adopt advanced strategies to mitigate risks. The "Black Box Emoji Fix" offers a multi-layered approach to prevent Unicode Injection Attacks in LLMs. It includes Unicode normalization and layered filtering to remove dangerous invisible characters, ensuring safer text handling. GrackerAI's AI-powered cybersecurity marketing platform helps organizations navigate these challenges by providing real-time insights into emerging threats and trends. Our services can assist in creating targeted marketing materials that resonate with cybersecurity professionals and decision-makers.
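A minimal sketch of the normalization-and-filtering idea follows, using Python's standard `unicodedata` module. It is not the "Black Box Emoji Fix" itself, whose details are not given here; the choice of NFKC and the rule of dropping variation selectors and format-category (`Cf`) characters are assumptions.

```python
import unicodedata


def is_variation_selector(cp):
    """True for code points in the two Unicode variation selector ranges."""
    return 0xFE00 <= cp <= 0xFE0F or 0xE0100 <= cp <= 0xE01EF


def sanitize(text):
    """Normalize text, then drop invisible characters that can carry hidden data."""
    # Layer 1: NFKC folds compatibility forms (e.g. fullwidth letters) to plain ones.
    normalized = unicodedata.normalize("NFKC", text)
    kept = []
    for ch in normalized:
        # Layer 2: variation selectors are category Mn, so filter them by range.
        if is_variation_selector(ord(ch)):
            continue
        # Layer 3: format characters (Cf) include zero-width spaces and joiners.
        if unicodedata.category(ch) == "Cf":
            continue
        kept.append(ch)
    return "".join(kept)


print(sanitize("😎\ufe0f\U000E0158"))  # 😎
print(sanitize("sens\u200bitive"))     # sensitive
```

Stripping every `Cf` character also removes the zero-width joiner, which legitimate emoji sequences and some scripts rely on, so a production filter would likely need an allowlist rather than this blanket rule.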

Explore GrackerAI's Services

To stay ahead of evolving threats and enhance your organization's cybersecurity marketing efforts, consider leveraging GrackerAI's innovative tools. Our platform automates insight generation from industry developments, allowing your marketing teams to focus on strategy while we handle the technical aspects. Visit GrackerAI at https://gracker.ai to explore our services or contact us for more information.
